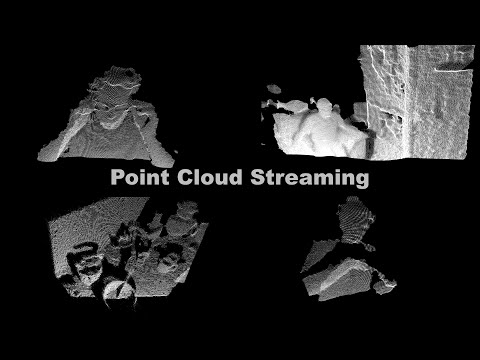

Example of video and point cloud streaming with hardware decoding and custom MLSP protocol:

- streaming video to UI element (RawImage)

- streaming video to scene element (anything with texture)

- streaming textured point clouds (Mesh)

This project contains a native library plugin.

See how-it-works on wiki to understand the project.

See benchmarks on wiki for glass-to-glass latency.

See hardware-video-streaming for related projects.

See videos to understand point cloud streaming features:

| Point Cloud Streaming | Infrared Textured Point Cloud Streaming |
|---|---|
| (video) | (video) |

Currently Unix-like platforms only (e.g. Linux).

Tested on Ubuntu 18.04.

Tested on Intel Kaby Lake.

For video: Intel VAAPI compatible hardware decoders (Quick Sync Video). H.264 through VAAPI will likely also work on AMD and NVIDIA. Other technologies may also work but were not tested.

For point clouds: Intel VAAPI HEVC Main10 compatible hardware decoders, at least Intel Apollo Lake. Other technologies may also work but were not tested.
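One way to check whether a machine exposes the decoders listed above is to look at the profiles reported by `vainfo`. The following is a minimal sketch, not part of this project: the helper name `has_vaapi_decoder` is hypothetical, and it assumes `vainfo` prints one `VAProfile... : VAEntrypoint...` pair per line, with `VAEntrypointVLD` marking decode support.

```shell
# Hypothetical helper: succeeds if vainfo-style text on stdin lists the
# given profile together with a decode (VLD) entrypoint.
has_vaapi_decoder() {
    grep -Eq "^[[:space:]]*$1[[:space:]]*:[[:space:]]*VAEntrypointVLD"
}

if command -v vainfo >/dev/null 2>&1; then
    # vainfo may fail when no VAAPI driver is available; treat that as empty output
    info=$(vainfo 2>/dev/null || true)
    for profile in VAProfileH264Main VAProfileHEVCMain10; do
        if printf '%s\n' "$info" | has_vaapi_decoder "$profile"; then
            echo "$profile: hardware decoding reported"
        else
            echo "$profile: not reported by vainfo"
        fi
    done
else
    echo "vainfo not found (assumption: install it from your distribution's packages)"
fi
```

A missing profile here does not prove that streaming will fail, but it is a cheap first check before building the plugin.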

All dependencies apart from FFmpeg are included as submodules.

Works with the system FFmpeg on Ubuntu 18.04 but not on 16.04 (outdated FFmpeg and VAAPI ecosystem).
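To see what the system FFmpeg offers before building, you can print its version and check whether it lists the `vaapi` hardware acceleration method. This is only a sketch: the helper name `lists_vaapi` is hypothetical, and it assumes the `ffmpeg` command-line tool comes from the same build as the installed libavcodec and libavutil, which may not hold on every system.

```shell
# Hypothetical helper: succeeds if the `ffmpeg -hwaccels` listing on stdin
# contains a line that is exactly "vaapi".
lists_vaapi() {
    grep -qx 'vaapi'
}

if command -v ffmpeg >/dev/null 2>&1; then
    # first line of the version banner, e.g. for comparing 16.04 and 18.04 systems
    ffmpeg -version | head -n 1
    if ffmpeg -hide_banner -hwaccels 2>/dev/null | lists_vaapi; then
        echo "system FFmpeg lists the vaapi hwaccel"
    else
        echo "system FFmpeg does not list the vaapi hwaccel"
    fi
else
    echo "ffmpeg not found"
fi
```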

The build steps below were tested on Ubuntu 18.04.

Requires Unity 2019.3 for technical reasons.

# update package repositories

sudo apt-get update

# get avcodec and avutil

sudo apt-get install ffmpeg libavcodec-dev libavutil-dev

# get compilers and make

sudo apt-get install build-essential

# get cmake - we need to specify libcurl4 for Ubuntu 18.04 dependencies problem

sudo apt-get install libcurl4 cmake

# get git

sudo apt-get install git

# clone the repository with *RECURSIVE* for submodules

git clone --recursive https://github.com/bmegli/unity-network-hardware-video-decoder.git

# build the plugin shared library

cd unity-network-hardware-video-decoder

cd PluginsSource

cd unhvd-native

mkdir build

cd build

cmake ..

make

# finally copy the native plugin library to Unity project

cp libunhvd.so ../../../Assets/Plugins/x86_64/libunhvd.so

Assuming you are using a VAAPI device.

- Open the project in Unity

- Choose and enable GameObject

- For Script define Configuration (Port, Device)

- For troubleshooting use programs in PluginsSource/unhvd-native/build

| Element | Video | Point Clouds |
|---|---|---|
| GameObject | Canvas -> CameraView -> RawImage (UI), VideoQuad (scene) | PointCloud |
| Script | RawImageVideoRenderer, VideoRenderer | PointCloudRenderer |
| Configuration | Port (network), Device (acceleration), script code | Port (network), Device (acceleration), script code |
| Troubleshooting | unhvd-frame-example | unhvd-cloud-example |
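The Device setting expects a VAAPI render node such as `/dev/dri/renderD128`. If you are unsure which nodes exist on your machine, a small sketch like the following lists them. The helper name `list_render_nodes` is hypothetical, and the assumption is that render nodes appear as `renderD*` entries under `/dev/dri`, as on typical Linux systems.

```shell
# Hypothetical helper: print DRM render nodes found under a directory
# (defaults to /dev/dri), one per line.
list_render_nodes() {
    dir=${1:-/dev/dri}
    for node in "$dir"/renderD*; do
        # with no match the pattern stays literal, so test for existence
        [ -e "$node" ] && echo "$node"
    done
    return 0
}

list_render_nodes
```

With more than one GPU there may be several nodes, in which case the one belonging to the decoding hardware is the one to configure.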

| Element | Video | Point Clouds |
|---|---|---|
| Sending side | NHVE nhve-stream-h264, RNHVE realsense-nhve-h264 | RNHVE realsense-nhve-hevc, RNHVE realsense-nhve-depth-ir, RNHVE realsense-nhve-depth-color |

For a quick test you may use the procedurally generated H.264 video from NHVE.

If you have a Realsense camera, you may use RNHVE.

# assuming you built NHVE, port is 9766, VAAPI device is /dev/dri/renderD128

# in NHVE build directory

./nhve-stream-h264 127.0.0.1 9766 10 /dev/dri/renderD128

# if everything went well you will see 10 seconds of video (moving through grayscale).

# assuming you built RNHVE, port is 9766, VAAPI device is /dev/dri/renderD128

# in RNHVE build directory

./realsense-nhve-h264 127.0.0.1 9766 color 640 360 30 20 /dev/dri/renderD128

# if everything went well you will see 20 seconds of video streamed from the Realsense camera.

Assuming Realsense D435 camera and 848x480.

# assuming you built RNHVE, port is 9768, VAAPI device is /dev/dri/renderD128

# in RNHVE build directory

./realsense-nhve-hevc 127.0.0.1 9768 depth 848 480 30 500 /dev/dri/renderD128

# for infrared textured point cloud

./realsense-nhve-depth-ir 127.0.0.1 9768 ir 848 480 30 500 /dev/dri/renderD128 8000000 1000000 0.0001

# for infrared rgb textured point cloud (D415/D455)

./realsense-nhve-depth-ir 127.0.0.1 9768 ir-rgb 848 480 30 500 /dev/dri/renderD128 8000000 1000000 0.0001

# for color textured point cloud, depth aligned

./realsense-nhve-depth-color 127.0.0.1 9768 depth 848 480 848 480 30 500 /dev/dri/renderD128 8000000 1000000 0.0001f

# for color textured point cloud, color aligned, color intrinsics required

./realsense-nhve-depth-color 127.0.0.1 9768 color 848 480 848 480 30 500 /dev/dri/renderD128 8000000 1000000 0.0001f

If you are using a different Realsense device or resolution, you will have to configure camera intrinsics.

See point clouds configuration for the details.

If you experience stuttering with artifacts, increase the UDP buffer size.
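Before raising the limits, it may help to check the current values on your own system, since defaults can differ between machines. The sketch below reads them and prints what ten times each value would be. The helper name `scaled_rmem` is hypothetical, and the factor of 10 is only the multiplier used in this README, not a tuned recommendation.

```shell
# Hypothetical helper: multiply a buffer size in bytes by a factor (default 10).
scaled_rmem() {
    echo $(( $1 * ${2:-10} ))
}

# Report the current receive-buffer settings and their 10x values (read-only).
for key in rmem_default rmem_max; do
    file=/proc/sys/net/core/$key
    if [ -r "$file" ]; then
        current=$(cat "$file")
        echo "$key: current $current, 10x would be $(scaled_rmem "$current")"
    fi
done
```

For example, `scaled_rmem 212992` prints `2129920`.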

# here 10x the default value on my system

sudo sh -c "echo 2129920 > /proc/sys/net/core/rmem_max"

sudo sh -c "echo 2129920 > /proc/sys/net/core/rmem_default"

Code in this repository and my dependencies are licensed under Mozilla Public License, v. 2.0.

This is similar to LGPL but more permissive:

- you can use it as LGPL in proprietary software

- unlike LGPL you may compile it statically with your code

Like in LGPL, if you modify the code, you have to make your changes available. Making a GitHub fork with your changes satisfies those requirements perfectly.

Since you are linking to FFmpeg libraries, also consider avcodec and avutil licensing.